A few weeks ago, a colleague asked me how he should run a simple job in Azure. A quick web search turns up WebJobs or Azure Batch, but those are rarely the right fit for a specific problem.

In our case, the job should retrieve some data from Microsoft CRM, filter and edit a few of the records, and write the result out as a CSV file somewhere. So not a spectacular task, but a very common one. The devil is in the details. Here are my answers to him and my views on the possibilities:

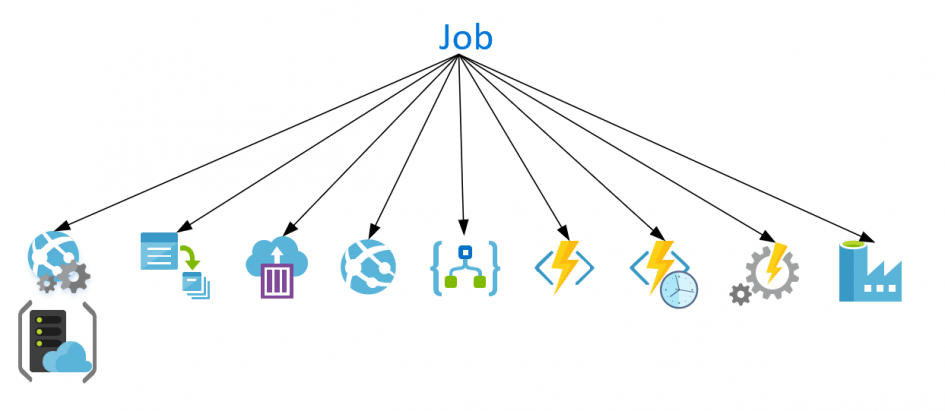

| Solution | Pros | Cons |
| --- | --- | --- |
| WebJob | Well known; simple way to deploy a command-line app | Always needs an App Service and an App Service plan; no configuration changes after upload |
| Batch | Focused on high-performance computing; useful for image rendering | Expensive (starts VMs with dedicated graphics cards) |
| Container Instance | Lightweight; created on demand; any implementation approach is possible | Needs a second service to start it (such as an Azure Function or a Runbook); difficult to monitor |
| App Service | Managed web server (Tomcat); managed runtime (Java, .NET); easy to use and well known | No consumption plan, needs an App Service plan; needs a second service to call the app once a day; fixed timeout of 230 seconds, so the job has to be implemented asynchronously |
| Logic Apps | Simple to configure and set up (graphical UI); scales out as far as needed; only costs money when it runs | Every connector call is billed (can get expensive); not suited to complex code logic (which should be split out into a REST service); no custom implementation if you want to write the code in your preferred language |
| Azure Function | Many supported languages (.NET, Node.js, Python, Java, PowerShell); only costs money when it runs; can be started by a timer trigger | Has a 10-minute timeout |
| Azure Durable Function | No timeout limit | Needs an asynchronous implementation and an orchestration function; supports only C#, F# and JavaScript |
| Azure Automation / Runbooks | Great management features (scheduling, monitoring, log collection); easy to configure; the logic is written as a script | Only PowerShell and Python are supported |
| Data Factory | Designed for transporting and orchestrating data; many built-in connectors; can run on a timer trigger; can transform data; great monitoring and logging included | Has a small learning curve |
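The App Service row mentions that a job running longer than the 230-second request timeout has to be implemented asynchronously. A minimal sketch of that idea, using only the Python standard library: one call starts the work and returns a job ID immediately, a second call is polled for the status. The function names (`start_job`, `get_status`, `long_running_job`) and the in-memory registry are assumptions for illustration; a real service would expose these as HTTP endpoints and keep the state somewhere more durable than process memory.

```python
import threading
import time
import uuid

# In-memory job registry (assumption: a real service would persist this).
_jobs: dict = {}
_lock = threading.Lock()


def long_running_job() -> str:
    """Hypothetical stand-in for the real work; sleeps briefly instead."""
    time.sleep(0.1)
    return "export finished"


def _run(job_id: str) -> None:
    """Execute the job in the background and record its outcome."""
    try:
        update = {"status": "done", "result": long_running_job()}
    except Exception as exc:  # keep the failure visible to the caller
        update = {"status": "failed", "result": str(exc)}
    with _lock:
        _jobs[job_id].update(update)


def start_job() -> str:
    """What a 'start' endpoint would do: register the job and return at once."""
    job_id = str(uuid.uuid4())
    with _lock:
        _jobs[job_id] = {"status": "running", "result": None}
    threading.Thread(target=_run, args=(job_id,), daemon=True).start()
    return job_id


def get_status(job_id: str) -> dict:
    """What a 'status' endpoint would return when the caller polls."""
    with _lock:
        return dict(_jobs[job_id])
```

The caller keeps the returned ID and polls `get_status` until the status is no longer `"running"`, so no single request has to stay open for the whole duration of the job.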

My personal recommendation would be to take a look at Data Factory. Most jobs just have to move data from left to right and change a few details along the way, which is a simple exercise for Data Factory. Later on, the Data Factory can grow into a data hub on a larger scale.

If the solution needs to be self-implemented, I would start with Azure (Durable) Functions. They can be developed and tested locally, and monitoring is now pretty good.
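To make the "developed and tested locally" point concrete, here is a minimal sketch of the job from the introduction (retrieve, filter/edit, write CSV) written as plain Python functions. The record fields (`name`, `email`, `active`) and the filter rule are assumptions, not the real CRM schema, and the CRM call is passed in as `fetch_records` so a local test can supply fake data. A timer-triggered Function would then only need to call `run_job` and store the returned text.

```python
import csv
import io


def filter_and_edit(records):
    """Keep active contacts and tidy up their name and e-mail fields."""
    result = []
    for record in records:
        if not record.get("active"):
            continue  # filter step: drop inactive records
        result.append({
            "name": record["name"].strip(),
            "email": record["email"].strip().lower(),
        })
    return result


def to_csv(rows, fieldnames=("name", "email")):
    """Serialise rows to CSV text; the caller decides where to store it."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def run_job(fetch_records):
    """fetch_records is injected so the job can run without a real CRM."""
    return to_csv(filter_and_edit(fetch_records()))
```

Called with a fake source that returns one active record (`" Ada "`, `"ADA@Example.com"`) and one inactive record, `run_job` returns a header line followed by the single row `Ada,ada@example.com`.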

If the code already exists, or requires really complex logic plus more configuration and libraries, then I would package the job into a container.
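For the container route, configuration usually arrives through environment variables. The sketch below shows one way an entry point could read and validate them before the job starts. The variable names (`CRM_URL`, `OUTPUT_PATH`, `BATCH_SIZE`) and the default batch size are hypothetical, and the export itself is only printed, not performed.

```python
import os
import sys


def load_config(env=os.environ):
    """Read settings from environment variables (names are hypothetical)."""
    missing = [key for key in ("CRM_URL", "OUTPUT_PATH") if not env.get(key)]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    return {
        "crm_url": env["CRM_URL"],
        "output_path": env["OUTPUT_PATH"],
        "batch_size": int(env.get("BATCH_SIZE", "500")),  # assumed default
    }


def main(env=os.environ):
    """Return a process exit code: 0 on success, 1 on a configuration error."""
    try:
        config = load_config(env)
    except ValueError as exc:
        print(exc, file=sys.stderr)
        return 1
    # Placeholder for the real export logic.
    print(f"would export from {config['crm_url']} to {config['output_path']}")
    return 0
```

The container's start command would run something like `sys.exit(main())`, so that a missing setting ends the run with a non-zero exit code instead of failing silently.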

What's your opinion?